Hardware Requirements for Nvidia’s Chat with RTX

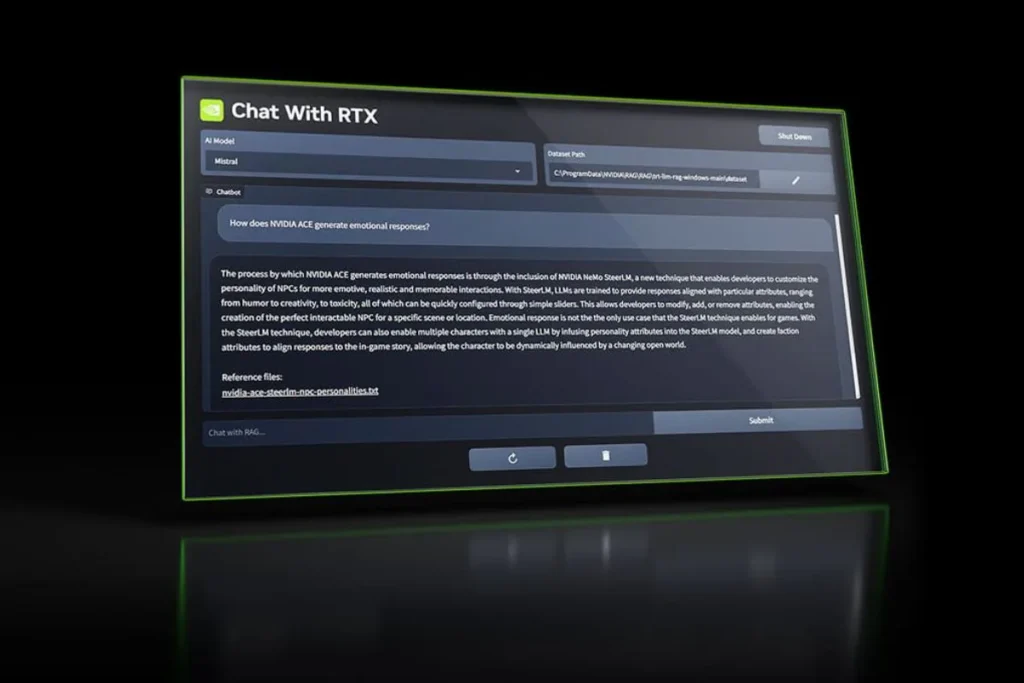

Nvidia’s Chat with RTX is an AI-powered chatbot that runs locally. Unlike most chatbots, which rely on cloud servers for processing, Chat with RTX runs entirely on a Windows PC and does not need an internet connection to answer queries. Because documents and prompts stay on the machine, users get greater privacy and control over their data. Nvidia has released Chat with RTX as a demo app to showcase local AI processing on its GPUs.

To use Chat with RTX, you need a Windows PC or workstation with an RTX 30- or 40-series GPU and at least 8 GB of VRAM. Once installed, the app takes only a few clicks to set up. Although it runs locally, Chat with RTX can be personalized: users point it at their own documents and files, and it draws on that content when answering.
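The GPU requirement above can be checked with a short script. The following is a minimal sketch, not Nvidia's own installer check: it assumes the `nvidia-smi` tool that ships with the Nvidia driver is on the PATH, and it assumes that 30- and 40-series cards can be recognized by an "RTX 30xx" or "RTX 40xx" pattern in the reported name. The function names are hypothetical.

```python
import re
import subprocess

# 8 GB minimum from the article, expressed in MiB (the unit nvidia-smi reports).
MIN_VRAM_MIB = 8 * 1024

# Assumption: 30/40-series cards carry "RTX 30xx" or "RTX 40xx" in their name.
SERIES_PATTERN = re.compile(r"RTX\s*(30|40)\d{2}")


def parse_gpu_lines(output):
    """Parse 'name, memory_mib' CSV lines into (name, vram_mib) tuples."""
    gpus = []
    for line in output.strip().splitlines():
        if not line.strip():
            continue
        # Split on the last comma so the memory figure is always the final field.
        name, _, mem = line.rpartition(",")
        gpus.append((name.strip(), int(mem.strip())))
    return gpus


def meets_requirements(name, vram_mib):
    """Return True if the GPU looks like a 30/40-series card with enough VRAM."""
    return bool(SERIES_PATTERN.search(name)) and vram_mib >= MIN_VRAM_MIB


def query_gpus():
    """Ask nvidia-smi for each GPU's name and total memory in MiB."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_lines(result.stdout)
```

On a machine with the driver installed, `any(meets_requirements(n, v) for n, v in query_gpus())` gives a quick yes/no answer. The name pattern is a heuristic, so treat a negative result as a prompt to check Nvidia's published requirements rather than as a final verdict.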

One of the standout features of Chat with RTX is its ability to analyze and summarize large volumes of text-based data quickly and efficiently. By leveraging AI algorithms, the chatbot can sift through documents, PDFs, and even YouTube videos to extract relevant information and provide insightful responses. This capability makes it an invaluable tool for tasks such as research, data analysis, and information retrieval.

Chat with RTX accepts several kinds of input: text, PDF, DOC/DOCX, and XML files, as well as YouTube video URLs. This range lets users apply the chatbot across different applications and workflows, whether they are summarizing research papers, analyzing market trends, or extracting insights from video content.
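To make the list of accepted inputs concrete, here is a small sketch that sorts candidate sources into the categories named above before handing them to the app. It is an illustration only and not part of Chat with RTX: the function name, the exact file extensions, and the set of YouTube hostnames are assumptions.

```python
from pathlib import PurePath
from urllib.parse import urlparse

# Extensions assumed to correspond to the formats listed in the article.
SUPPORTED_EXTENSIONS = {".txt", ".pdf", ".doc", ".docx", ".xml"}

# Assumption: these hostnames cover the common forms of YouTube video links.
YOUTUBE_HOSTS = {"youtube.com", "www.youtube.com", "youtu.be"}


def classify_source(source):
    """Return 'youtube', 'document', or 'unsupported' for a path or URL."""
    parsed = urlparse(source)
    if parsed.scheme in ("http", "https"):
        # Only YouTube links are listed as a supported URL type.
        return "youtube" if parsed.hostname in YOUTUBE_HOSTS else "unsupported"
    # Anything else is treated as a file path and matched by extension.
    if PurePath(source).suffix.lower() in SUPPORTED_EXTENSIONS:
        return "document"
    return "unsupported"
```

For example, `classify_source("notes/paper.PDF")` returns `"document"`, while `classify_source("image.png")` returns `"unsupported"`. Matching by extension alone does not verify file contents, which keeps the sketch simple at the cost of trusting file names.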