Officials Warn Against Relying on AI Chatbots for Voting Information Ahead of U.S. Presidential Election

With just four days until the U.S. presidential election, government officials are urging voters to avoid relying on artificial intelligence chatbots for election-related information. The New York Attorney General’s office, led by Letitia James, issued a consumer alert on Friday, cautioning that AI-powered chatbots frequently provide incorrect voting information, which could mislead voters.

Testing conducted by the Attorney General’s office on multiple AI chatbots revealed that they often gave inaccurate responses to questions about voting processes, raising concerns that voters who follow misleading information could lose their chance to vote. The alert emphasized the importance of using official sources to verify voting details as Election Day approaches, with the presidential race between Republican candidate Donald Trump and Democratic Vice President Kamala Harris remaining tight.

The increase in generative AI use has amplified fears about election misinformation, with AI-generated content and deepfakes on the rise. Clarity, a machine learning firm, reported a 900% increase in deepfake content over the past year. U.S. intelligence officials warn that some of this content is created or funded by foreign actors, including Russia, in attempts to influence the election.

Experts are particularly wary of misinformation risks associated with generative AI, a technology that rapidly gained popularity after OpenAI’s release of ChatGPT in late 2022. Large language models (LLMs) are known to produce unreliable information, often “hallucinating” or inventing details about critical voting-related topics like polling locations and voting methods. Alexandra Reeve Givens, CEO of the Center for Democracy & Technology, cautioned, “Voters categorically should not look to AI chatbots for information about voting or the election.”

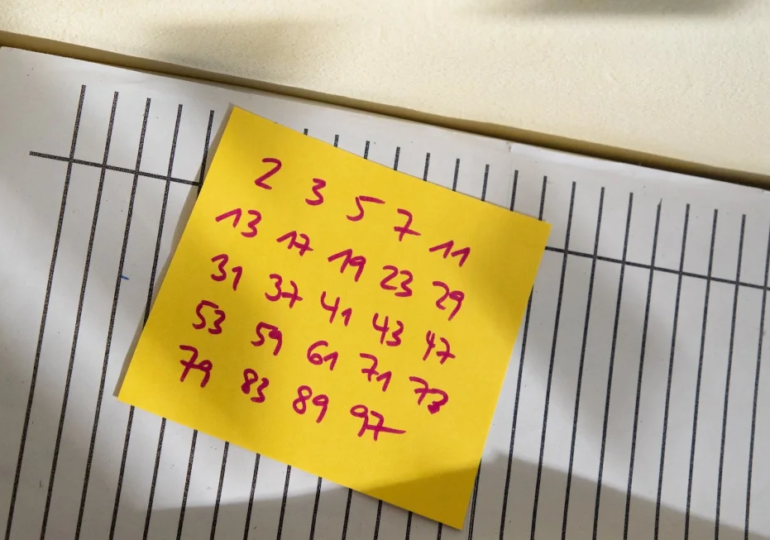

A July study by the Center for Democracy & Technology examined responses from major AI chatbots to 77 election-related questions and found that more than one-third of the answers contained inaccuracies. The study included chatbots from Mistral, Google, OpenAI, Anthropic, and Meta. In response, an Anthropic spokesperson stated, “For specific election and voting information, we direct users to authoritative sources,” emphasizing that the company’s chatbot, Claude, does not provide real-time updates on election details.

OpenAI announced it will begin prompting users who ask ChatGPT about election results to consult reliable news outlets like the Associated Press and Reuters, or to contact local election boards for accurate information. In a recent report on its efforts to counter misinformation, OpenAI disclosed that it had disrupted more than 20 deceptive networks attempting to misuse its models, though none of the election-related activities gained significant traction.

Meanwhile, state legislators are taking steps to counteract AI-based election disinformation. Voting Rights Lab reported that, as of November 1, there were 129 bills across 43 states aiming to regulate the spread of AI-generated misinformation related to elections.