YouTube is taking steps to address the growing amount of artificial intelligence (AI)-generated content on its platform, with the aim of making such content more transparent to viewers. Under a new policy, creators must disclose when a video has been meaningfully altered with AI tools or synthetically generated and appears realistic. The announcement builds on the content-policy update YouTube made in November 2023.

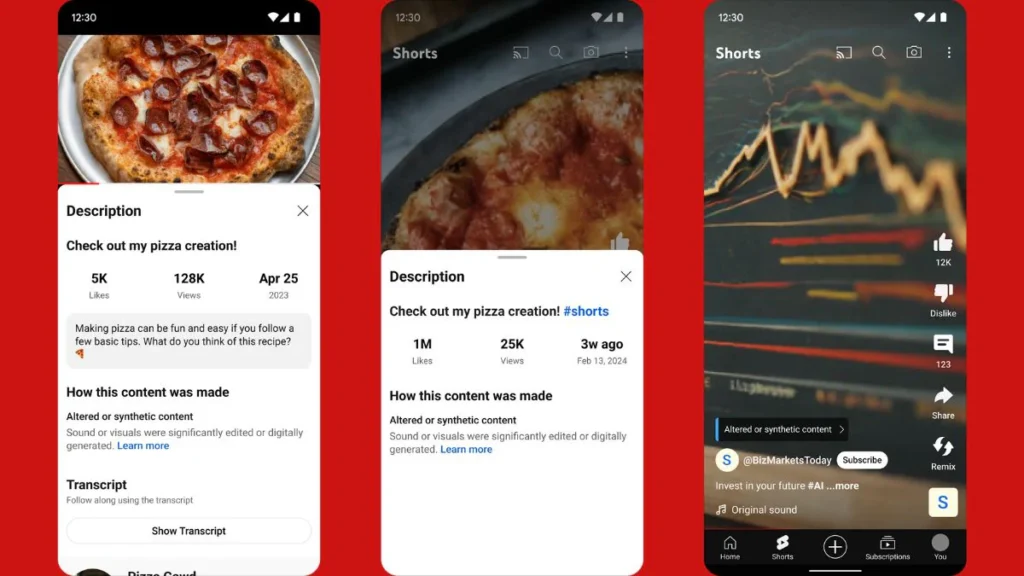

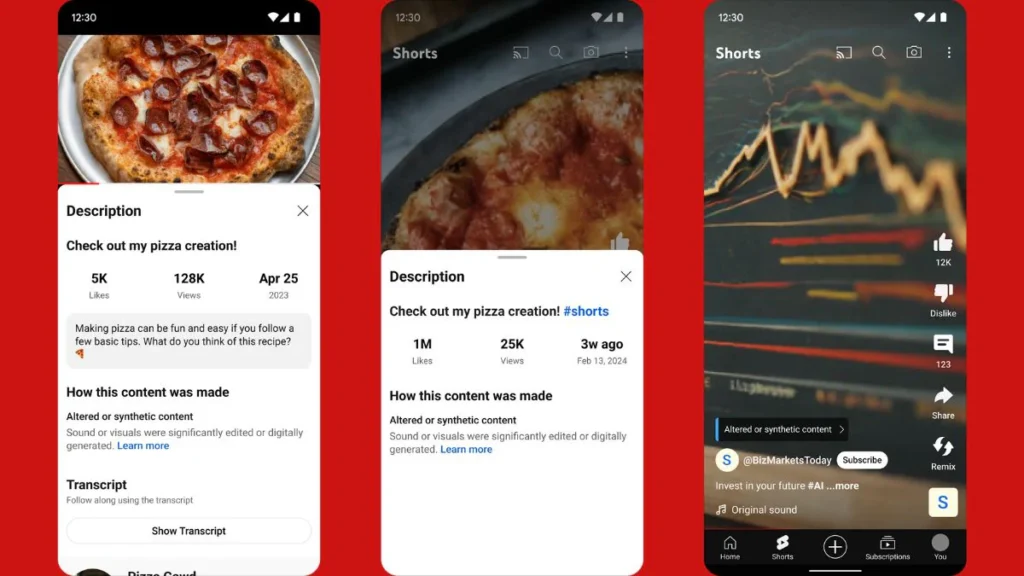

To make disclosure straightforward, YouTube has added a disclosure tool directly to the video-upload workflow. Creators use it to tell viewers when a video has been significantly altered or synthesized with AI. Building the disclosure into the upload process is meant to make compliance simple and to keep labeling consistent across videos.

YouTube also plans to apply labels itself when creators fail to disclose AI-generated content, particularly for videos that involve sensitive subject matter. The goal is to give viewers clear information about the nature of what they are watching, even when the creator has not provided it.

In the blog post announcing the changes, YouTube wrote: “We’re beginning to roll out a new tool today that will require creators to share when the content they’re uploading is meaningfully altered or synthetically generated and seems realistic.” The company presented the tool as part of its broader effort to keep the platform trustworthy while giving creators a clear way to handle AI-generated content responsibly.

The rollout marks a significant step in YouTube's push for transparency and accountability around AI-generated content. By combining creator disclosures with labels applied by the platform itself, YouTube aims to help viewers understand what they are watching and to encourage creators to use AI responsibly.