Microsoft Postpones AI-Powered Recall Feature Again, Testing Planned for Later This Year

Microsoft Announces October Release for Recall Feature Testing with Windows Insiders

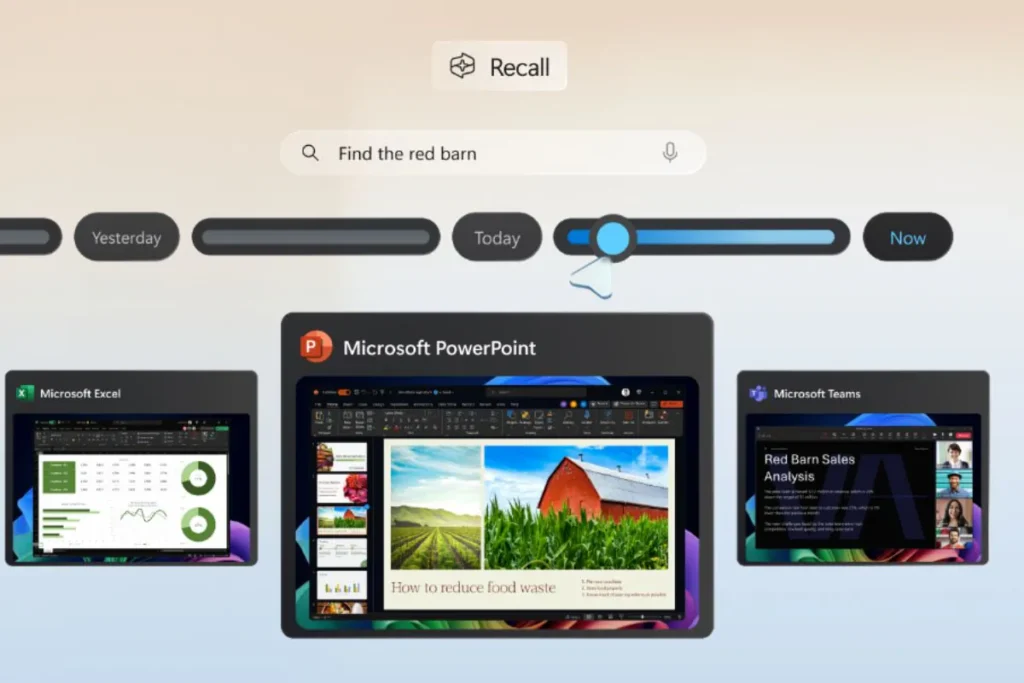

Microsoft has announced yet another delay for its artificial intelligence (AI) feature, Recall, as part of its ongoing efforts to enhance user privacy and security. Recall, a feature within the company’s Copilot+ PCs offering on the Windows operating system, allows users to track and view their on-device history through automatically captured screenshots. Initially unveiled in May, the feature was met with public concerns regarding privacy and safety, leading to a temporary rollback. While a preview release to Windows Insiders was planned for August, Microsoft has now pushed the launch to October, citing the need to provide a more “trustworthy and secure” user experience.

In its latest update, Microsoft confirmed that the Recall feature will be available for preview through the Windows Insider Program (WIP) to users with Copilot+ PCs starting in October. The company emphasized its commitment to ensuring user security and privacy, which has driven the decision to delay the rollout. Microsoft further noted that a detailed blog post will be published with additional information once the feature is released to beta testers, providing transparency and addressing any ongoing concerns about the new functionality.

However, Microsoft has yet to announce a definitive public release date for Recall. The company plans to rely on feedback from the Windows Insiders community to identify any potential issues or areas for improvement before making the feature widely available. This cautious approach underscores Microsoft’s focus on refining the user experience and addressing the privacy concerns that were raised during the initial announcement.

When Recall was first introduced, several users raised alarms because the screenshots captured by the feature were stored unencrypted on the device. Without encryption, anyone with access to the device could potentially view the stored screenshots, which sparked a wave of public concern about data privacy. In response to these criticisms, Microsoft decided to withdraw the feature and committed to bolstering its security framework before reintroducing it.

In a follow-up blog post, Microsoft outlined the steps it has taken to enhance the security of the Recall feature. One of the key changes is making Recall an entirely opt-in feature: users will need to actively enable it, rather than having it turned on by default. Additionally, Microsoft has integrated Recall with Windows Hello, the company’s authentication system that uses facial recognition, a fingerprint, or a PIN for device access. This integration is aimed at adding an extra layer of security to protect the user’s on-device history from unauthorized access.

The delay and Microsoft’s detailed response to the security concerns surrounding Recall demonstrate the company’s increased focus on user trust and data protection. As AI-driven features become more integral to operating systems and personal devices, ensuring that these features do not compromise user privacy is critical. Microsoft’s ongoing adjustments to Recall reflect its commitment to balancing innovation with responsible data handling practices. As the tech giant continues to refine Recall and gather feedback from beta testers, the final version will hopefully meet both its security standards and the expectations of its users.