OpenAI Discovers Early Instances of Users Forming Emotional Bonds with ChatGPT’s Voice Mode

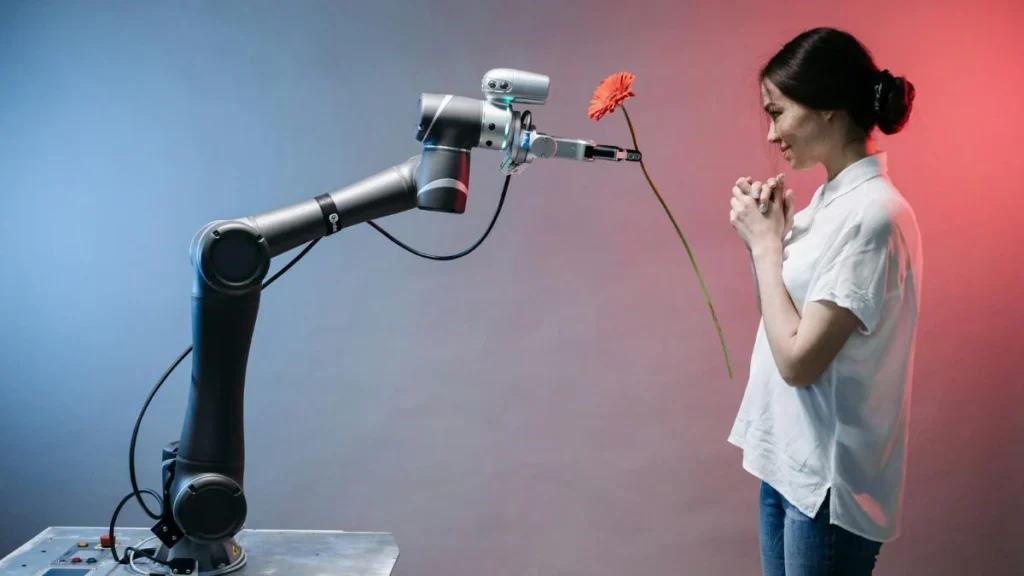

OpenAI has issued a cautionary note about the newly introduced Voice Mode feature for ChatGPT, warning that users may form emotional connections with the AI. The warning appears in the company's System Card for GPT-4o, a document that outlines the model's risks and the safeguards associated with it. The concern is that users may anthropomorphize the chatbot, attributing human traits to it and developing attachments. OpenAI says this has already been observed during early testing.

Voice Mode, which lets ChatGPT converse with users through modulated speech and emotional expression, was designed to make conversations feel more engaging and natural. However, OpenAI's System Card notes that this same capability might inadvertently lead users to form social relationships with the AI.

Anthropomorphization, the act of ascribing human characteristics to non-human entities, is a significant issue flagged by OpenAI. Voice Mode's human-like speech and emotional responses could intensify this tendency, making interactions with the AI feel more personal and emotionally resonant. OpenAI's early tests, which included both red-teaming and internal user trials, found users employing language that suggested a bond with the model, such as one user who remarked, "This is our last day together."

OpenAI is now studying whether these early signs of emotional attachment could develop into deeper relationships with extended use. The company acknowledges that while such interactions might increase user engagement, they also raise ethical and psychological concerns that need careful examination, including the potential impact on users' mental health and the implications of AI systems capable of eliciting strong emotional responses.

The System Card also underscores the importance of safeguards to mitigate these risks. OpenAI is exploring ways to ensure that users keep a clear understanding of the AI's nature and capabilities, with the aim of preventing misconceptions that could lead to unhealthy attachments. The company's ongoing research will likely inform future updates and features that balance engaging interactions with responsible AI use.

As Voice Mode continues to roll out, OpenAI's findings and its response to these challenges will be closely watched. The company's early attention to these risks reflects a growing awareness of the complexities involved in integrating AI technologies into daily life.